Before looking at edge computing, cloudlets and fog computing, we need to understand what cloud computing is and why it is needed. Cloud computing provides computing services on demand over the internet. Its backbone is the datacenter, which stores and processes data; multiple datacenters are connected through an optical network to form a data center network (DCN). Cloud computing has gained popularity among organisations due to:

1) Self-service capability: There is no need to keep hardware and software maintenance staff.

2) Scalability: It is easier for an organisation to expand. It can use infrastructure according to its requirements and, when it needs to grow, request more infrastructure from the cloud provider.

3) Flexibility: As cloud services are available over the internet, they can be accessed from anywhere.

4) Affordability: It saves organisations a considerable amount of money, as they do not have to buy million-dollar servers.

Now that the Internet of Things (IoT) is widely used, it brings a new set of requirements:

1) Real-time processing of sensor data: the communication latency between end devices and the DCN can become a bottleneck.

2) Mobility support: most IoT devices move around, so support for mobility is necessary.

Edge computing is an attempt to overcome these shortfalls. It leverages the storage and computation capacity of a large number of mobile devices, wearable devices and sensors, and acts as a layer between end devices and the cloud.

Because these “edge devices” handle requests locally rather than sending them to the cloud, latency is reduced and requests can be handled in real time. The abundance of edge devices also supports mobility [1]. Edge computing, also known simply as edge, brings processing close to the data source, reducing the need to send data to a remote cloud or server for processing.
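The latency argument above can be made concrete with a little arithmetic. The sketch below models response time as one round trip plus processing time; the function name and all figures are illustrative assumptions, not measurements from the cited sources.

```python
def response_time_ms(one_way_latency_ms: float, processing_ms: float) -> float:
    """Round trip to the node plus the time spent processing there."""
    return 2 * one_way_latency_ms + processing_ms


# Assumed, illustrative figures only: the edge node is close but slower,
# the cloud datacenter is far away but faster.
edge_ms = response_time_ms(one_way_latency_ms=2.0, processing_ms=8.0)
cloud_ms = response_time_ms(one_way_latency_ms=50.0, processing_ms=3.0)

print(f"edge: {edge_ms} ms, cloud: {cloud_ms} ms")
```

Under these assumed numbers the edge node answers faster even though it processes more slowly, because the network round trip dominates the total.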

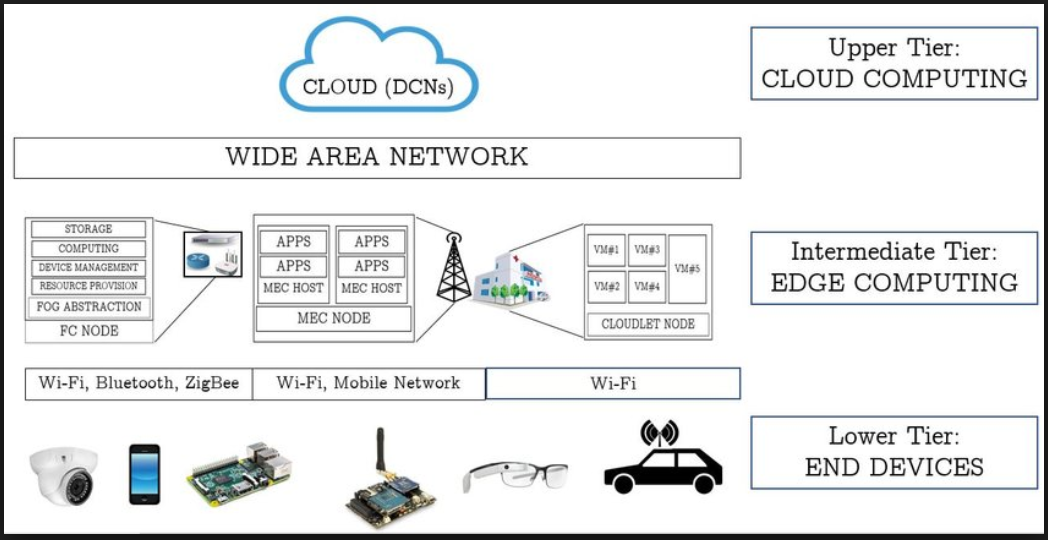

Fig 1: N-tier Architecture for Edge Computing Paradigm [1]

This edge layer can be implemented as Mobile Edge Computing (MEC), Fog Computing (FC) or Cloudlet Computing (CC).

M. Satyanarayanan coauthored an article with Paramvir Bahl, Ramón Cáceres and Nigel Davies that laid the conceptual foundation for edge computing. They presented a two-level architecture in which the first level represents present-day cloud infrastructure and the second level consists of dispersed elements called cloudlets, which hold state cached from the first-level clouds. This cloudlet concept can be expanded into a multilevel cloudlet hierarchy.

Flavio Bonomi and his colleagues introduced the term fog computing in 2012 to refer to dispersed cloud infrastructure in which data is cached from the main data server [1]. Fog computing can be seen as one of the standards for implementing edge computing: it provides computation, storage and networking between the devices and the centralised cloud data center. Fog extends the cloud infrastructure close to the devices that produce and act on IoT data. Fog nodes can be deployed anywhere within the network, alongside data-producing devices. Any device that can compute, store data and connect to the network can be called a fog node [1].

Fig 2: Representation of fog infrastructure [2].

A cloudlet is a part of the cloud that is placed close to the end nodes. It holds data cached from the cloud and thus reduces the time needed to fetch data from the main cloud. A cloudlet can also be defined as a resource-rich system placed close to mobile devices and connected to the internet.

The proximity of a cloudlet matters because it helps achieve goals such as [4]:

- Low end-to-end latency.

- Scalability: only the relevant information is transmitted to the cloud after analysis.

- Privacy: a privacy policy can be enforced before data is released to the cloud.

- Cloud failure protection: if cloud services become unavailable, a fallback service on a nearby cloudlet can mask the failure.
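The scalability and privacy goals listed above can be sketched as a small preprocessing step on a cloudlet. This is a minimal illustration, assuming a hypothetical privacy policy (`PRIVATE_FIELDS`) and a hypothetical sensor record layout; neither comes from the cited sources.

```python
from statistics import mean

# Hypothetical policy: fields that must never leave the cloudlet.
PRIVATE_FIELDS = {"user_id", "location"}


def enforce_privacy(reading: dict) -> dict:
    """Drop private fields before anything is released to the cloud."""
    return {k: v for k, v in reading.items() if k not in PRIVATE_FIELDS}


def summarise(readings: list) -> dict:
    """Reduce raw readings to a compact summary to save bandwidth."""
    temps = [r["temperature_c"] for r in readings]
    return {
        "count": len(temps),
        "mean_temperature_c": mean(temps),
        "max_temperature_c": max(temps),
    }


raw = [
    {"user_id": "u1", "location": "room-1", "temperature_c": 21.0},
    {"user_id": "u2", "location": "room-2", "temperature_c": 23.0},
    {"user_id": "u3", "location": "room-3", "temperature_c": 25.0},
]
cleaned = [enforce_privacy(r) for r in raw]
summary = summarise(cleaned)  # only this summary would be sent upstream
print(summary)
```

Only the three-field summary leaves the cloudlet here, instead of every raw reading with its identifying fields.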

Difference:

| S.No | Aspect | Edge Computing | Fog Computing | Cloudlet |
|---|---|---|---|---|
| 1 | Introduced by | ETSI | Cisco | Prof. Mahadev S |
| 2 | Main aim | A stepping stone towards 5G | IoT and wireless sensor and actuator networks (WSAN) | Distribution of cloud services |

Edge Computing and CDN:

The growth of the internet has transformed business all over the world, so the speed, availability and integrity of the network are crucial for every stakeholder. Before content delivery networks (CDNs) were deployed, every request was sent to the main web server to be processed, which increased latency. Also, when many requests were sent to a particular website at the same time, the resulting congestion affected its availability. To overcome this problem and accelerate web performance, the CDN was proposed as a solution; it was introduced by Akamai in the late 1990s [4] [3].

A CDN is a network that caches data from the main server on multiple CDN servers placed close to the end users, thus reducing latency. CDNs have helped providers achieve better Quality of Service (QoS) and Quality of Experience (QoE) for their customers. CDN nodes placed close to users, holding prefetched and cached data, reduce latency to a great extent, and video caching saves a lot of bandwidth [4]. These nodes can also support location-relevant advertising, helping advertisers reach the relevant audience. Over the years CDNs have grown in number and size: more than a third of internet traffic is served by CDNs, and they are expected to carry more than half in the near future [5].
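The caching behaviour described above can be sketched in a few lines. This is a simplified model, not how any particular CDN is implemented; the `Origin` and `CdnNode` classes and the example path are hypothetical.

```python
class Origin:
    """Stands in for the main web server."""

    def __init__(self, content: dict):
        self.content = dict(content)
        self.requests = 0  # how often the origin had to answer

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return self.content[path]


class CdnNode:
    """A cache placed close to users; contacts the origin only on a miss."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        if path in self.cache:
            self.hits += 1
        else:
            self.misses += 1
            self.cache[path] = self.origin.fetch(path)
        return self.cache[path]


origin = Origin({"/video.mp4": b"video-bytes"})
node = CdnNode(origin)
for _ in range(5):  # five users request the same video
    node.get("/video.mp4")

print(node.hits, node.misses, origin.requests)
```

Five user requests reach the node, but the origin is contacted only once, which is the load reduction the text refers to.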


Edge computing shares its roots with the CDN. As mentioned earlier, Akamai introduced the CDN in the late 1990s to accelerate web performance by using nodes close to the end user. Edge computing extends the same concept by leveraging cloud infrastructure. For both, proximity to the end user is crucial; however, a CDN is limited to caching web content, whereas cloudlets can run arbitrary code just as in cloud computing.
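The distinction between caching content and running arbitrary code can be illustrated with a toy edge node. The class, its methods and the overheating check are hypothetical names chosen for this sketch.

```python
class EdgeNode:
    """Serves cached content like a CDN node, and can also run deployed code."""

    def __init__(self, cached_content: dict):
        self.cache = dict(cached_content)
        self.functions = {}

    def serve_cached(self, path: str):
        # CDN-style behaviour: return stored content unchanged.
        return self.cache.get(path)

    def deploy(self, name: str, func) -> None:
        # Edge-style behaviour: accept arbitrary code to run near the user.
        self.functions[name] = func

    def invoke(self, name: str, payload):
        return self.functions[name](payload)


def detect_overheating(readings_c: list) -> list:
    """Return the indexes of readings above an assumed 80 degree threshold."""
    return [i for i, t in enumerate(readings_c) if t > 80.0]


edge = EdgeNode({"/logo.png": b"png-bytes"})
edge.deploy("overheating", detect_overheating)
alerts = edge.invoke("overheating", [71.5, 83.2, 79.9, 90.1])
print(alerts)
```

A pure CDN node would stop at `serve_cached`; the `deploy` and `invoke` steps are what make the node an extension of cloud computing rather than a cache.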

Similarities:

1) Both CDN and edge computing are concepts built around bringing nodes close to the user edge.

2) Both help in reducing latency.

3) Both reduce load on the main server by distributing traffic.

4) In both concepts, the proximity of the node to end users is crucial.

Differences:

1) A CDN only caches content, whereas in edge computing cloudlets can run arbitrary code just as in cloud computing, so edge computing can be seen as an extension of cloud computing.


Apart from connectivity, network service providers also provide network functions such as network address translation (NAT), firewalls, encryption, DNS and caching. Initially all of these functions were embedded in hardware on the customer side. Bringing new technologies into this architecture was extremely difficult: the hardware appliances were proprietary, they were hard to maintain and required professional support, and new implementations needed hardware changes too. Overall, the problems with this architecture were:

- High Cost

- Difficult to upgrade

Service providers began exploring new ways to deliver these functions, which laid the foundation for network function virtualization (NFV). NFV decouples functions from hardware and moves them to virtual servers.

NFV offers several benefits:

- Less costly to implement

- Easy to upgrade and add new functions

- More scalable and flexible

- Reduces time to market for new implementations

The functions virtualised inside a virtual server are called virtual network functions (VNFs). Functions such as intrusion detection systems, load balancers and firewalls, which were previously implemented in dedicated hardware, are now implemented inside virtual servers.

A VNF is a software instance within NFV that consists of one or more VMs (or portions of VMs) running various network function processes.
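The idea of network functions as software can be sketched as plain functions applied to packets in sequence. This is a toy model, not a real NFV platform: the packet layout, the blocked-port policy and the chain runner are assumptions made for illustration. The public address comes from the 203.0.113.0/24 range reserved for documentation.

```python
from typing import Callable, Optional

Packet = dict
Vnf = Callable[[Packet], Optional[Packet]]

BLOCKED_PORTS = {23}        # hypothetical firewall policy (telnet)
PUBLIC_IP = "203.0.113.10"  # address from a documentation-only range


def firewall(packet: Packet) -> Optional[Packet]:
    """Drop packets aimed at a blocked port, pass everything else."""
    return None if packet["dst_port"] in BLOCKED_PORTS else packet


def nat(packet: Packet) -> Optional[Packet]:
    """Rewrite the private source address to the public one."""
    return {**packet, "src_ip": PUBLIC_IP}


def run_chain(packet: Packet, chain: list) -> Optional[Packet]:
    """Apply each VNF in order; stop as soon as one drops the packet."""
    for vnf in chain:
        packet = vnf(packet)
        if packet is None:
            return None
    return packet


chain = [firewall, nat]
allowed = run_chain({"src_ip": "192.168.1.5", "dst_port": 443}, chain)
dropped = run_chain({"src_ip": "192.168.1.5", "dst_port": 23}, chain)
print(allowed, dropped)
```

Adding a new function means appending another callable to `chain`, which mirrors the claim that NFV makes upgrades easier than replacing hardware appliances.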


Content delivery networks (CDNs) currently serve more than a third of all internet traffic, and this share is expected to grow to over 71% according to the Cisco Visual Networking Index (2016-2021). Most of this traffic will be IP video traffic, so it becomes essential to meet the requirements of a growing user base by distributing content efficiently [1] [8]. Video content can be transcoded and decoded, but this process requires a lot of computational capacity.

Researchers have been looking at the potential of CDNs for building large-scale video transcoding platforms. NFV is also gaining attention in many research fields, CDN slicing being one of them [4] [16] [8]. In virtual CDN slices, transcoding servers are treated as VNFs that are hosted in virtual machines and can be instantiated in different cloud domains. Several factors, such as Quality of Experience, guide the optimization of the cost associated with deploying virtual transcoders.

CDN slicing with NFV calls for extensive benchmark analysis to study virtual transcoding behaviour in cloud infrastructure.

CDN as a Service (CDNaaS) is a video-on-demand (VoD) platform that focuses on creating CDN slices across multiple cloud domains and on managing their life cycle. A CDN slice has the following components:

- Virtual transcoder

- Virtual streamers

- Virtual caches

- CDN-slice-specific coordinator

Every CDN slice has a number of VNFs running on virtual machines hosted across multiple cloud platforms. They are administered by one coordinator, which manages the different VNFs of the slice and ensures that they can communicate effectively.

The main components of the CDNaaS platform are:

- Orchestrator: It manages the life cycle of the CDN slice and its resources. The owner of a CDN slice logs into the orchestrator to perform actions such as instantiating and terminating VNFs.

- Slice-specific Coordinator: Every CDN slice has one coordinator, also known as the VNF Manager, which functions as its brain. It helps the owner manage uploaded videos and end users, and provides access to a dashboard for monitoring slice resources, content popularity and access statistics.

- Virtual cache: A CDN slice has a network of geographically dispersed cache servers. Each node caches and stores content. A user's request to watch a video in a desired resolution is fulfilled by the cache server nearest to the user, reducing latency and increasing QoS.

- Virtual transcoder: It performs remote virtual transcoding. The transcoder server always listens for the coordinator's orders. It picks up a video from the relevant cache server and informs the coordinator about its progress.

- Virtual streamer: It handles user requests and load balancing, i.e. redirecting users to the appropriate cache servers.
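The streamer's redirection role described in the component list can be sketched as follows. This is an assumed selection rule (lowest latency, then lowest load), not the policy of the CDNaaS platform itself; the `VirtualCache` fields and cache names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualCache:
    name: str
    latency_ms: float       # assumed measured latency to the requesting user
    active_sessions: int    # current load on this cache
    content: set = field(default_factory=set)  # (video_id, resolution) pairs


def redirect(caches: list, video_id: str, resolution: str):
    """Pick the closest cache holding the video; break ties by lower load."""
    candidates = [c for c in caches if (video_id, resolution) in c.content]
    if not candidates:
        return None  # no cache has this resolution yet; it must be transcoded
    return min(candidates, key=lambda c: (c.latency_ms, c.active_sessions))


caches = [
    VirtualCache("cache-a", 8.0, 40, {("v1", "720p")}),
    VirtualCache("cache-b", 8.0, 10, {("v1", "720p")}),
    VirtualCache("cache-c", 30.0, 5, {("v1", "1080p")}),
]

print(redirect(caches, "v1", "720p").name)
print(redirect(caches, "v1", "1080p").name)
print(redirect(caches, "v1", "480p"))
```

When no cache holds the requested resolution the function returns `None`, which is the point at which a coordinator would order a virtual transcoder to produce it.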

Proposed solution framework:

The proposed framework manages the transcoding of a high volume of media files using heterogeneous transcoding nodes distributed over multiple cloud domains.

The architecture of the proposed solution is described below:

Knowledge base (KB): It acts as a database to manage and store data. For CDNaaS, the aim is to build a strong knowledge base by storing all previous transcoding results and related information.

Transcode Orchestrator (TO): Global optimization is the major responsibility of this component, which is generally hosted on separate virtual servers. The TO has two components: the KB and the Balancer. The Balancer retrieves data from the KB to generate a machine learning predictive model. The main function of the TO is to distribute the videos uploaded to a CDN slice across multiple VNF-Transcode nodes. Based on the prediction, it assigns transcoding jobs to the nodes that can process them efficiently with the resources available. The TO server keeps track of the performance of all nodes and assigns jobs accordingly, optimising CPU and memory utilization and thus reducing transcoding time.

Scheduler: It is responsible for local optimization. The scheduler agent schedules, at node level, the transcoding of the subset of videos received from the TO. It also defines the local transcoding process and predicts the local transcoding time.
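The TO's job distribution can be sketched with a simple greedy heuristic. This is not the machine learning model described above: the predictor below is a stand-in that assumes transcoding time equals video duration divided by a per-node speed factor, and the longest-job-first assignment rule is a swapped-in technique chosen for illustration.

```python
def predict_seconds(duration_s: float, node_speed: float) -> float:
    """Stand-in predictor: assumes time scales with duration / node speed."""
    return duration_s / node_speed


def assign(videos: dict, nodes: dict):
    """Give each video, longest first, to the node that would finish it soonest."""
    finish = {name: 0.0 for name in nodes}  # predicted busy time per node
    plan = {name: [] for name in nodes}     # videos assigned to each node
    by_length = sorted(videos.items(), key=lambda kv: kv[1], reverse=True)
    for video, duration in by_length:
        best = min(
            nodes,
            key=lambda n: finish[n] + predict_seconds(duration, nodes[n]),
        )
        finish[best] += predict_seconds(duration, nodes[best])
        plan[best].append(video)
    return plan, finish


# Hypothetical inputs: video durations in seconds, relative node speeds.
videos = {"a": 600, "b": 300, "c": 300}
nodes = {"fast": 2.0, "slow": 1.0}

plan, finish = assign(videos, nodes)
print(plan)
print(finish)
```

A learned model built from the knowledge base would replace `predict_seconds`; the assignment loop itself would not need to change.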

Experimental evaluation:

The focus is to benchmark the performance of a VNF-Transcoder based on the FFmpeg software by varying different parameters.
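A benchmark of this kind could be driven by a small timing harness such as the sketch below. It assumes an `ffmpeg` binary built with libx264 is available on the PATH; the file names, target heights and preset are hypothetical parameters, and the source does not specify which settings were actually varied.

```python
import subprocess
import time


def build_command(src: str, dst: str, height: int, preset: str = "fast") -> list:
    """Build an FFmpeg command that rescales the video to the given height."""
    return [
        "ffmpeg", "-y",                # overwrite the output file if present
        "-i", src,
        "-vf", f"scale=-2:{height}",   # keep aspect ratio, force an even width
        "-c:v", "libx264",
        "-preset", preset,
        "-c:a", "copy",                # leave the audio stream untouched
        dst,
    ]


def time_transcode(src: str, dst: str, height: int, preset: str = "fast") -> float:
    """Run one transcoding job and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(build_command(src, dst, height, preset),
                   check=True, capture_output=True)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical input file; vary the target height to compare timings.
    for height in (480, 720, 1080):
        seconds = time_transcode("input.mp4", f"out_{height}.mp4", height)
        print(f"{height}p: {seconds:.1f} s")
```

Repeating each run several times and recording CPU and memory usage alongside the elapsed time would give results closer to the kind of benchmark the text describes.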

Input- Number of videos arrived and

- https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8016213

- https://www.cisco.com/c/dam/en_us/solutions/trends/iot/docs/computing-overview.pdf

- https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=1250586

- the emergence of cloud

- using sdn and nfv

- https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=6968961

- https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7045396

- Performance benchmark
