People with conditions such as Parkinson’s disease, multiple sclerosis or tetraplegia have difficulty using a traditional wheelchair-integrated joystick because of limb deformities, amputation, tremor or paralysis below the neck. For them, different methods of controlling a wheelchair have been invented. Among them, the most popular are voice command, brain command, eye tracking, face tracking and tongue tracking. In this project, the main emphasis is put on interfaces based on eye-tracking, head gestures and user habits.

The eye-based methods are effective, but they distract the patient’s view. Among the different eye movement research methods, the physiological background of Electro-Oculography is well understood. Therefore, a report on an Electro-Oculography project is chosen to be described here.

- Voice command is too sensitive to background noise, but works quite fast.

- Tongue tracking is inconvenient, but less so than eye tracking.

- Face tracking is sensitive to skin color, but quite efficient.

- Brain command is very successful, but difficult to adjust.

2.1. Eye-computer interfaces

The authors of [3] present an electrooculography (EOG) based wheelchair interface. Beyond simple path guiding, this device has three other features: path re-routing, obstacle sensing and avoidance, and tilt detection. It is also worth noting that a microcontroller is used in the wheelchair system instead of a laptop computer.

Electrooculography is a biomedical technique used for observing eye movements. It measures the corneo-retinal standing potential, that is, the resting potential between the front and the back of the eye. This potential comes from the fact that the eye acts as a dipole: it has a positive charge on the cornea (the front part of the eye) and a negative charge on the retina (the rear part of the eye). The measurements are conducted with electrodes applied to the skin of the face. The location of the electrodes is important and depends on the eye movements to be recorded. If the patient’s gaze moves in the horizontal direction, the electrodes have to be placed near the lateral canthi of both eyes. On the other hand, if eye movements are expected to vary in the vertical direction, the electrodes have to be placed above and below the eye.

Figure 2.1 Electro-Oculography method

It is particularly interesting that the designers added ultrasonic sensors to the wheelchair. These sensors, placed on the wheelchair body, emit a signal and then receive the wave reflected from an obstacle. The returned signal helps the microcontroller to choose the best path.
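
The ranging principle behind such a sensor can be illustrated with a short sketch. It is not taken from the cited report: the function names, the 0.5 m safety distance and the speed of sound of 343 m/s (air at about 20 °C) are assumptions made only for illustration. The distance is half of the round-trip path travelled by the echo.

```python
# Illustrative sketch only; names and constants are assumptions, not the authors' code.
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def echo_distance_m(round_trip_s: float) -> float:
    """Return the distance to an obstacle from the echo round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The wave travels to the obstacle and back, so the path is halved.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def obstacle_ahead(round_trip_s: float, safe_distance_m: float = 0.5) -> bool:
    """Report whether the obstacle is closer than the (assumed) safety distance."""
    return echo_distance_m(round_trip_s) < safe_distance_m
```

With these assumptions, an echo that returns after 2 ms corresponds to an obstacle about 0.34 m away, which would be treated as too close.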

The researchers designed the interface so that gaze directions such as left, right, forward or back correspond to the internationally understood compass directions (north, west, east and south). No pulse means “centre”. The microcontroller of the so-called User Instructions Processor (UIP) encodes the incoming signals in order to drive in a certain direction or to avoid a collision with an obstacle.
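
A minimal sketch of how two EOG channels could be turned into such commands is given below. The threshold value, the sign conventions (positive horizontal signal meaning "right", positive vertical signal meaning "forward") and the function name are assumptions for illustration; the cited report does not specify them here.

```python
# Illustrative sketch only; threshold and sign conventions are assumptions.
def gaze_command(horizontal_uv: float, vertical_uv: float,
                 threshold_uv: float = 100.0) -> str:
    """Map horizontal and vertical EOG amplitudes (microvolts) to a command."""
    h_active = abs(horizontal_uv) >= threshold_uv
    v_active = abs(vertical_uv) >= threshold_uv
    if not h_active and not v_active:
        return "centre"  # no pulse on either channel
    # The channel with the larger deflection decides the direction.
    if abs(horizontal_uv) >= abs(vertical_uv):
        return "right" if horizontal_uv > 0 else "left"
    return "forward" if vertical_uv > 0 else "back"
```

For example, under these assumptions `gaze_command(250, 20)` yields `"right"`, while two weak signals yield `"centre"`.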

Finally, the tilt detection algorithm is implemented with a gyroscope attached to the Drive Control Module. It informs the UIP about the possible danger of exceeding the safe tilt limit predefined for the wheelchair. In this case, either corrective measures are taken by the user or an alert is sent to the patient’s doctor or nurse.
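
The idea of a tilt limit can be sketched as follows. A gyroscope reports angular rate, so the sketch integrates the rate over time to estimate the tilt angle and then looks for the first sample that exceeds the limit. The 10 degree limit, the fixed sampling interval and the function names are assumptions, and a real system would also have to compensate for gyroscope drift.

```python
# Illustrative sketch only; limit, sampling scheme and names are assumptions.
def integrate_tilt(rates_deg_s, dt_s, start_deg=0.0):
    """Estimate tilt angles by summing angular rates over fixed time steps."""
    angle = start_deg
    angles = []
    for rate in rates_deg_s:
        angle += rate * dt_s
        angles.append(angle)
    return angles


def first_limit_breach(angles_deg, safe_limit_deg=10.0):
    """Return the index of the first sample beyond the safe tilt limit, or None."""
    for index, angle in enumerate(angles_deg):
        if abs(angle) > safe_limit_deg:
            return index
    return None
```

A returned index would be the point at which the UIP asks the user to correct the situation or sends an alert.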

2.2. Head Gesture Recognition

This method, described in [1], captures images of the user’s head and processes them into commands. The equipment used for this application is called GestureCam. It is a smart camera that was modified by embedding a processing unit. This modification allows the device to process images at high resolution. Moreover, because of this, the only image feature that has to be processed is the output of the camera.

GestureCam is based on a Field-Programmable Gate Array (FPGA), which is intended to process intensive video data in real time faster than a traditional personal computer. First, the authors recorded a video of the user. After that, they used the Viola-Jones method to detect the user’s face.

Figure 2.2 Viola-Jones method used for detecting nose.

Figure 2.3 Viola-Jones method used for detecting eyes
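
The Viola-Jones method evaluates Haar-like rectangle features on an integral image, which lets the sum of any rectangle be read with four lookups. The sketch below shows only this building block in plain Python; it is not the FPGA implementation of the cited authors, and the function names and the tiny test image are assumptions for illustration.

```python
# Illustrative sketch only; not the authors' FPGA implementation.
def integral_image(img):
    """Build an integral image with one extra row and column of zeros."""
    height = len(img)
    width = len(img[0]) if height else 0
    ii = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four integral-image lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Haar-like feature: sum of the left half minus sum of the right half."""
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))
```

A detector combines many such features in a cascade of classifiers; that stage is omitted here.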

2.3. Anticipative Shared Control

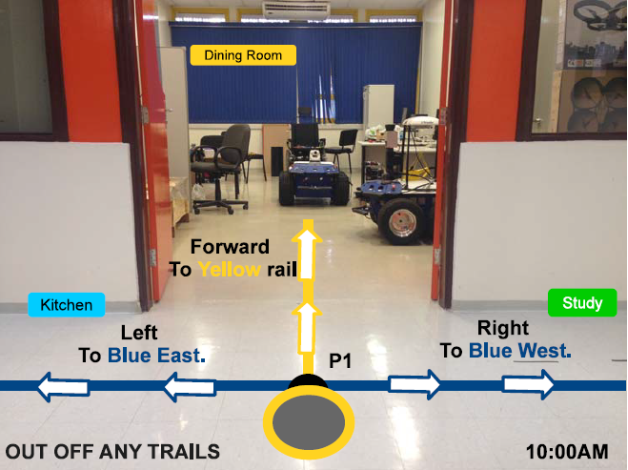

The authors of [2] presented a wheelchair navigation interface which enables the user to move from one room to another in an indoor environment. Control over this interface is shared between the user and the computer. Therefore, it is called “Anticipative Shared Control”, and the system is based on a POMDP (Partially Observable Markov Decision Process).

The main contribution of the computer is that it uses data based on human habits, visited paths, time of day etc. On the other hand, the user can decide whether to follow a particular path suggested by the interface or to change the settings. The main purpose of these operations is to fulfil the user’s intentions and expectations as fully as possible without increasing the effort of operating the wheelchair. The advantage of this solution is that the wheelchair can take the user on a longer trip without requiring more gestures.
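
The way habit data and a user input can be combined may be illustrated with the belief update that underlies a POMDP. In the sketch below the hidden state is the intended destination, the prior comes from habits, and one observation (for example a head gesture) reweights it by Bayes' rule. The room names, the probabilities and the assumption that the intention does not change between observations are hypothetical and are not taken from the cited paper.

```python
# Illustrative sketch only; rooms and probabilities are hypothetical.
def update_belief(belief, likelihood):
    """Bayes update: posterior(s) is proportional to likelihood(s) * belief(s)."""
    unnormalised = {s: belief[s] * likelihood.get(s, 0.0) for s in belief}
    total = sum(unnormalised.values())
    if total == 0:
        return dict(belief)  # observation carries no usable information
    return {s: p / total for s, p in unnormalised.items()}


# Prior from habits (e.g. time of day), then one observed gesture.
prior = {"kitchen": 0.5, "bedroom": 0.3, "bathroom": 0.2}
gesture_likelihood = {"kitchen": 0.2, "bedroom": 0.7, "bathroom": 0.1}
posterior = update_belief(prior, gesture_likelihood)
most_likely = max(posterior, key=posterior.get)
```

In this hypothetical case a single gesture is enough to shift the most likely destination from the kitchen to the bedroom, which is how the system can anticipate a longer trip from few user inputs.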

The authors note that using interactive interfaces such as eye-tracking or Electromyography (EMG) may be tiring for the user.

The proposed design of the wheelchair interface is to fuse head gestures and eye-tracking and to add more functions like winking, baring teeth, raising eyebrows or

In this report, various wheelchair interfaces were presented. The aim of their design is to improve the lives of people who suffer from diseases causing.

As far as eye-tracking is concerned, many papers on eye-tracking systems have been published. Generally, the advantage of eye-tracking over voice command is that the eye can send an accurate visible signal, for example by gazing in a certain direction.

It is worth noting that the eye-tracking method described in [3] is very reliable. Moreover, it is simple to use in various applications. Because of the straightforward gaze detection, it is accurate and, based on previous research, quite economical. The use of a microcontroller reduces the time of complex calculations, provides a sufficiently high level of intelligence and makes the wheelchair lighter by replacing a laptop computer.

The head gesture interface is quite effective and economical. However, it is affected by varying ambient conditions (such as changes in illumination or various objects in the background) as well as by the user’s appearance (face complexion or glasses).

[1] R. T. Bankar and S. S. Salankar, “Head Gesture Recognition System Using Gesture Cam,” in Fifth International Conference on Communication Systems and Network Technologies, Gwalior, India, 2015.

[2] P. Pinheiro, E. Cardozo and C. Pinheiro, “Anticipative Shared Control for Robotic Wheelchairs Used by People with Disabilities,” in IEEE International Conference on Autonomous Robot Systems and Competitions, Vila Real, Portugal, 2015.

[3] T. R. Pingali, S. Dubey, A. Shivaprasad, A. Varshney, S. Ravishankar, G. R. Pingali, N. K. Polisetty, N. Manjunath and K. V. Padmaja, “Eye-Gesture Controlled Intelligent Wheelchair using Electro-Oculography,” in IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 2014.
